Mounting NFS storage on a TKE 1.20 cluster with nfs-subdir-external-provisioner


2019-05-17


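Workloads on TKE often need shared storage. Out of the box, TKE supports two shared storage backends, COS and CFS (CFS is similar to NFS), and CFS is the more common choice. However, the TKE CFS component does not yet support sharing a single CFS instance: every dynamically created PVC gets its own shared file system, which wastes resources. An alternative is to back storage on TKE with a self-hosted NFS server. For Kubernetes versions before 1.20, the usual recommendation was the K8S NFS Provisioner, but that component is no longer maintained and is not compatible with clusters running Kubernetes 1.20 or later.

The officially recommended component for dynamic provisioning is now NFS Subdir External Provisioner. This article walks through deploying nfs-subdir-external-provisioner on a TKE 1.20 cluster; the same steps also apply to a self-managed Kubernetes cluster.

GitHub repository for nfs-subdir-external-provisioner: https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner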

1. Set up the NFS server

Setting up the NFS server is not covered here; follow step 1 of the earlier article:

https://cloud.tencent.com/developer/article/1699864

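2. Deploy nfs-subdir-external-provisioner

There are two ways to deploy nfs-subdir-external-provisioner: with Helm, or by applying plain YAML manifests directly.

2.1 Deploying with Helm

To deploy with Helm, run the following commands: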


    $ helm repo add nfs-subdir-external-provisioner https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner/
    $ helm install nfs-subdir-external-provisioner nfs-subdir-external-provisioner/nfs-subdir-external-provisioner \
        --set nfs.server=x.x.x.x \
        --set nfs.path=/nfs/data

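nfs.server is the address of the NFS server.

nfs.path is the exported directory used for storage.

2.2 Deploying with YAML manifests

Here the component is deployed in the default namespace. To deploy it in another namespace, change the namespace fields in the YAML. Start by applying the RBAC manifest: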

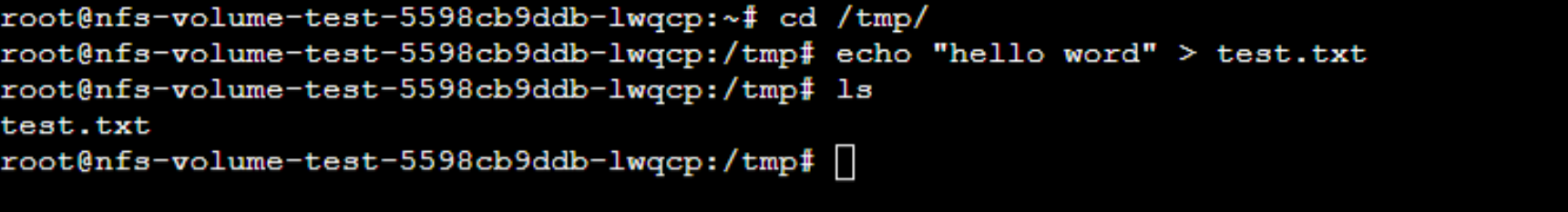

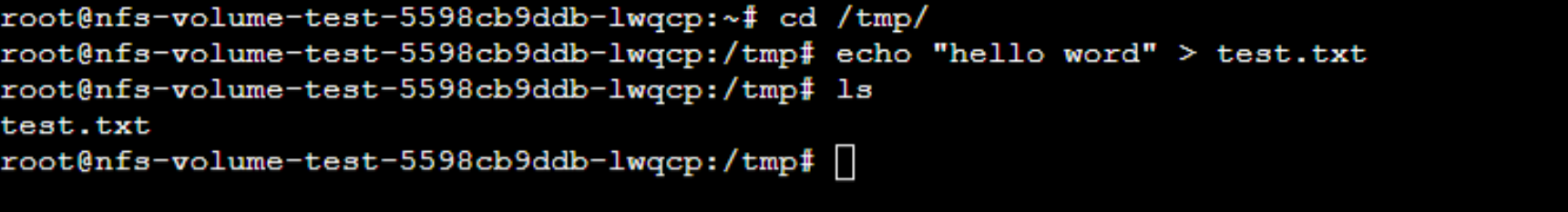

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-client-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["nodes"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "update", "patch"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: default
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    rules:
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: default
    roleRef:
      kind: Role
      name: leader-locking-nfs-client-provisioner
      apiGroup: rbac.authorization.k8s.io

Next, create the Deployment for NFS Subdir External Provisioner:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nfs-client-provisioner
      labels:
        app: nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    spec:
      replicas: 1
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: nfs-client-provisioner
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccountName: nfs-client-provisioner
          containers:
            - name: nfs-client-provisioner
              image: ccr.ccs.tencentyun.com/niewx-k8s/nfs-subdir-external-provisioner:v4.0.2
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                - name: PROVISIONER_NAME
                  # Provisioner name; the StorageClass created later must use the same value
                  value: k8s-sigs.io/nfs-subdir-external-provisioner
                - name: NFS_SERVER
                  # NFS server address; must match the value under volumes
                  value: 10.0.8.6
                - name: NFS_PATH
                  # NFS storage directory; must match the value under volumes
                  value: /nfs/data
          volumes:
            - name: nfs-client-root
              nfs:
                server: 10.0.8.6   # NFS server address
                path: /nfs/data    # NFS storage directory
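Both manifests above hard-code `namespace: default`. As a minimal sketch of the namespace change mentioned earlier, the helper below rewrites every `namespace:` field in a set of manifest files. The function name `set_manifest_namespace` and the file names `rbac.yaml` and `deployment.yaml` in the usage note are assumptions for illustration; the article does not name the files.

```shell
# Rewrite every "namespace: ..." field in the given manifest files.
# Usage: set_manifest_namespace <namespace> <file>...
set_manifest_namespace() {
  smn_ns="$1"
  shift
  for smn_file in "$@"; do
    smn_tmp="$(mktemp)" || return 1
    # Keep the original indentation and replace only the value.
    # Comment lines are untouched because they start with "#".
    if sed "s/^\([[:space:]]*namespace:\).*/\1 ${smn_ns}/" "$smn_file" > "$smn_tmp"; then
      # Write back through cat so the file keeps its permissions.
      cat "$smn_tmp" > "$smn_file"
      rm -f "$smn_tmp"
    else
      rm -f "$smn_tmp"
      return 1
    fi
  done
}
```

For example, `set_manifest_namespace nfs-storage rbac.yaml deployment.yaml` followed by `kubectl apply -f rbac.yaml -f deployment.yaml` would deploy into a namespace called `nfs-storage`, which has to exist already.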

Then create the StorageClass:

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: nfs-client
      annotations:
        storageclass.kubernetes.io/is-default-class: "false"  # whether this is the default StorageClass
    provisioner: k8s-sigs.io/nfs-subdir-external-provisioner  # or choose another name; must match the Deployment's PROVISIONER_NAME env
    parameters:
      archiveOnDelete: "false"  # "false": data is not kept when the PVC is deleted; "true": data is kept
    mountOptions:
      - hard        # use a hard mount
      - nfsvers=4   # NFS version; set it to match the NFS server (check with nfsstat -m)
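The comments above stress that the StorageClass `provisioner` field has to equal the Deployment's `PROVISIONER_NAME`. A rough way to check this against a live cluster is sketched below. The function names are made up for this example, and it assumes `kubectl` is configured for the cluster and that the provisioner is the first container in the Deployment, as in the manifest shown here.

```shell
# Print the provisioner field of a StorageClass.
storageclass_provisioner() {
  kubectl get storageclass "$1" -o jsonpath='{.provisioner}'
}

# Print the PROVISIONER_NAME env value of the first container in a Deployment.
# Usage: deployment_provisioner_name <deployment> <namespace>
deployment_provisioner_name() {
  kubectl get deployment "$1" -n "$2" \
    -o jsonpath='{.spec.template.spec.containers[0].env[?(@.name=="PROVISIONER_NAME")].value}'
}

# Succeed only when both names are non-empty and identical.
# Usage: provisioner_names_match <storageclass> <deployment> <namespace>
provisioner_names_match() {
  pnm_sc="$(storageclass_provisioner "$1")" || return 1
  pnm_dep="$(deployment_provisioner_name "$2" "$3")" || return 1
  [ -n "$pnm_sc" ] && [ "$pnm_sc" = "$pnm_dep" ]
}
```

With the manifests from this article, `provisioner_names_match nfs-client nfs-client-provisioner default && echo match` is one way to confirm the two values line up before creating any PVC.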

3. Test mounting a PVC from a pod

First, create a PVC:

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: test-claim
    spec:
      storageClassName: nfs-client
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 1Mi
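Before mounting the claim it can help to confirm that the provisioner actually bound it. The following is a small polling sketch, not part of the original walkthrough; the helper names, the default of 30 attempts, and the 2 second delay are arbitrary choices for illustration.

```shell
# Print the current phase of a PVC (for example Pending or Bound).
# Usage: pvc_phase <pvc> <namespace>
pvc_phase() {
  kubectl get pvc "$1" -n "$2" -o jsonpath='{.status.phase}'
}

# Poll until the PVC is Bound, or give up after <tries> attempts.
# Usage: wait_for_pvc_bound <pvc> [namespace] [tries] [delay-seconds]
wait_for_pvc_bound() {
  wpb_name="$1"
  wpb_ns="${2:-default}"
  wpb_tries="${3:-30}"
  wpb_delay="${4:-2}"
  wpb_i=0
  while [ "$wpb_i" -lt "$wpb_tries" ]; do
    if [ "$(pvc_phase "$wpb_name" "$wpb_ns" 2>/dev/null)" = "Bound" ]; then
      return 0
    fi
    wpb_i=$((wpb_i + 1))
    sleep "$wpb_delay"
  done
  return 1
}
```

For the claim above this would be called as `wait_for_pvc_bound test-claim default`. If it times out, the provisioner pod's logs are usually the first place to look.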

Then create a Deployment that mounts this PVC:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        k8s-app: nfs-volume-test
        qcloud-app: nfs-volume-test
      name: nfs-volume-test
      namespace: default
    spec:
      replicas: 1
      selector:
        matchLabels:
          k8s-app: nfs-volume-test
          qcloud-app: nfs-volume-test
      strategy:
        rollingUpdate:
          maxSurge: 1
          maxUnavailable: 0
        type: RollingUpdate
      template:
        metadata:
          labels:
            k8s-app: nfs-volume-test
            qcloud-app: nfs-volume-test
        spec:
          containers:
            - image: nginx:latest
              imagePullPolicy: Always
              name: nfs-volume-test
              resources: {}
              securityContext:
                privileged: false
              volumeMounts:
                - mountPath: /tmp
                  name: vol
          dnsPolicy: ClusterFirst
          restartPolicy: Always
          schedulerName: default-scheduler
          securityContext: {}
          terminationGracePeriodSeconds: 30
          volumes:
            - name: vol
              persistentVolumeClaim:
                claimName: test-claim

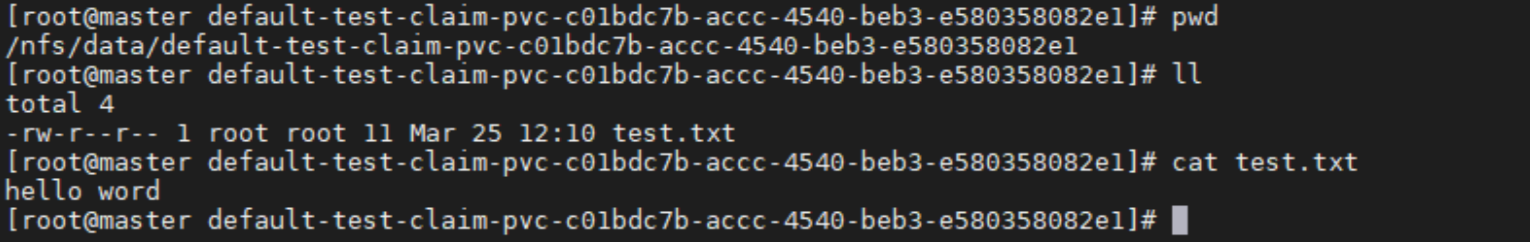

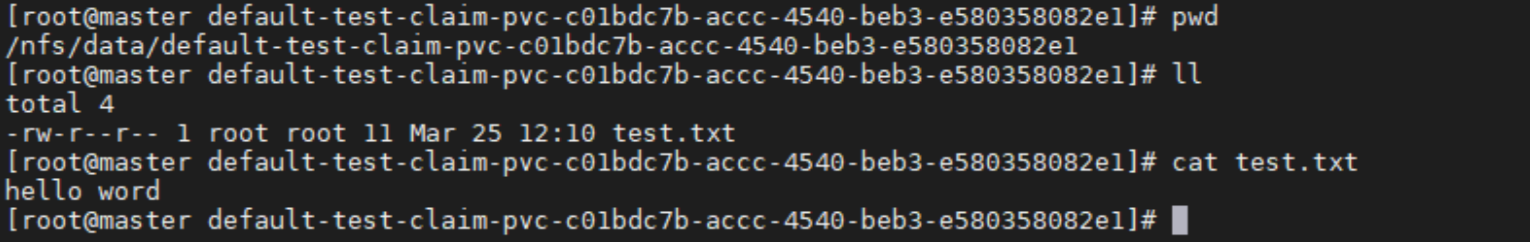

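To test the mount, create a file inside the container under the mount path (/tmp), then check the storage directory on the NFS server. The file has been synced there: by default, the provisioner creates one subdirectory per PVC under the NFS storage directory and mounts that subdirectory into the pod.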
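The check described above can be scripted roughly as follows. According to the project README, volumes are provisioned by default as `${namespace}-${pvcName}-${pvName}`, and the first helper simply builds that name. The function names and the probe file path in the usage note are assumptions made for this sketch.

```shell
# Directory name the provisioner uses by default for a volume:
# <namespace>-<pvcName>-<pvName>
pvc_subdir_name() {
  printf '%s-%s-%s\n' "$1" "$2" "$3"
}

# Look up the PV bound to a PVC and print the expected subdirectory name.
# Usage: pvc_subdir_from_cluster <namespace> <pvc>
pvc_subdir_from_cluster() {
  psc_ns="$1"
  psc_pvc="$2"
  psc_pv="$(kubectl get pvc "$psc_pvc" -n "$psc_ns" -o jsonpath='{.spec.volumeName}')" || return 1
  [ -n "$psc_pv" ] || return 1
  pvc_subdir_name "$psc_ns" "$psc_pvc" "$psc_pv"
}

# Create an empty probe file inside a pod of the given Deployment.
# Usage: touch_in_deployment <namespace> <deployment> <path>
touch_in_deployment() {
  kubectl exec -n "$1" "deploy/$2" -- touch "$3"
}
```

From a workstation with cluster access, `touch_in_deployment default nfs-volume-test /tmp/hello.txt` creates the probe file and `pvc_subdir_from_cluster default test-claim` prints the directory name to look for under /nfs/data on the NFS server.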

Next, try a StatefulSet whose PVCs are generated automatically from volumeClaimTemplates:

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: web
      labels:
        app: centos
        qcloud: web
    spec:
      selector:
        matchLabels:
          app: web
      replicas: 2
      volumeClaimTemplates:
        - metadata:
            name: test
            annotations:
              volume.beta.kubernetes.io/storage-class: "nfs-client"
          spec:
            accessModes: [ "ReadWriteMany" ]
            resources:
              requests:
                storage: 1Mi
      template:
        metadata:
          labels:
            app: web
        spec:
          containers:
            - name: nginx
              image: nginx
              imagePullPolicy: IfNotPresent
              volumeMounts:
                - mountPath: "/etc/nginx/conf.d"
                  name: test

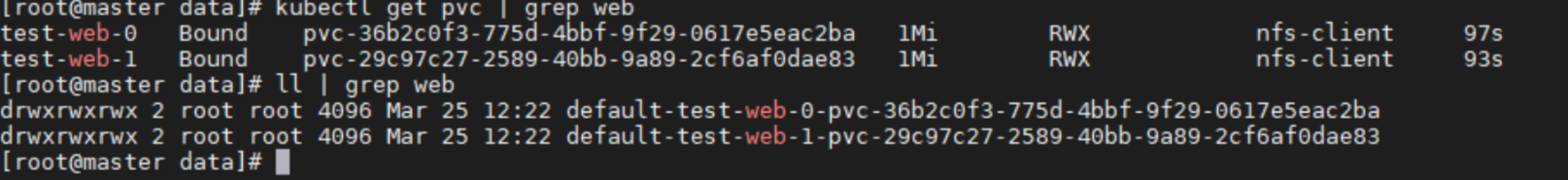

After creation, two PVCs are generated automatically, and matching storage directories appear on the NFS server.
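Kubernetes names the PVCs of a StatefulSet `<volumeClaimTemplate name>-<StatefulSet name>-<ordinal>`, so the two claims here should be test-web-0 and test-web-1. The helper below, whose name is made up for this example, lists the expected names so they can be compared with the output of `kubectl get pvc`.

```shell
# Print the PVC names a StatefulSet creates from one volumeClaimTemplate:
# <template-name>-<statefulset-name>-<ordinal>, ordinals starting at 0.
# Usage: statefulset_pvc_names <template-name> <statefulset-name> <replicas>
statefulset_pvc_names() {
  spn_template="$1"
  spn_sts="$2"
  spn_replicas="$3"
  spn_i=0
  while [ "$spn_i" -lt "$spn_replicas" ]; do
    printf '%s-%s-%s\n' "$spn_template" "$spn_sts" "$spn_i"
    spn_i=$((spn_i + 1))
  done
}
```

Calling `statefulset_pvc_names test web 2` prints one name per line for the StatefulSet above.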

Editor: 航网科技. Source: Tencent Cloud. Copyright belongs to the original author; please credit the source when reposting.
